Modifying InExcludeData inside ParallelFor?

-

Hello.

Basically my code looks like:

- (In the main thread) Allocate all the BaseObjects and add them to a vector (I will add all of them to the scene later).

- ParallelFor worker for sorting all objects and setting their parameters.

- Back in the main thread: InsertObject() for all objects into the doc.

But in step 3 I also need to perform a lot of `myObjectDataPluginInExData->InsertObject(myObjs.at(i))` operations, and the problem is that this really slows down the third step.

Therefore, I considered moving all `myInExData` modifications to step 2, so that they are performed in threaded mode. The idea is not to modify the scene, just a `tempInExData` variable.

I was thinking about 1) creating an instance of InExData that is not bound to any scene object, 2) making all the `myTempInExData->InsertObject(myObjects.at(i))` calls inside the ParallelFor, and 3) assigning `myTempInExData` to the `myInExData` of my plugin in the main thread.

Is this possible in some way? Is it possible to modify `myTempInExData` in the ParallelFor at all?

-

Hello @yaya,

Thank you for reaching out to us.

> [...] Allocate all the BaseObjects, add it to a vector-list [...]

You should not use `std::vector` but one of our collection types, e.g., `BaseArray`, but that is only a minor issue unrelated to your core problem.

The problem with your question is that you provide no code and no context, which makes it extremely hard to answer it in a meaningful way.

- How many objects are in your `std::vector`? When there are fewer than 250 objects, chances are that using `ParallelFor` will yield no speed improvement or even make things slightly slower.
- You say that your third step is really slow. How did you assess that? Are you sure that the execution of the third step is taking so long, and not the update, cache, and scene redraw events carried out by the Cinema 4D core after you have added a possibly very large number of objects to the scene?

The direct answer to your question is: No, `InExcludeData::InsertObject` cannot be parallelized in a meaningful manner. The type internally makes a copy of its data on modification and is therefore thread-safe in an abstract sense, but you still must manage access to it, as otherwise data will be lost. Without such management, something like this will happen:

```
InExcludeData data;
InsertObject:Thread I  - InExcludeData localCopy1 = copy(data);
InsertObject:Thread I  - Starts modifying localCopy1 ...
InsertObject:Thread II - InExcludeData localCopy2 = copy(data);
InsertObject:Thread II - Starts modifying localCopy2 ...
InsertObject:Thread I  - Finished
InsertObject:Thread I  - data = localCopy1;
InsertObject:Thread II - Finished
InsertObject:Thread II - data = localCopy2;
```

I.e., the modifications of Thread I contained in `localCopy1` are lost when Thread II writes its local copy back.

This is all however very theoretical and technical without a good reason. Populating `InExcludeData` and writing parameters to an object should not take that long; the real bottleneck is more likely Cinema 4D crunching on your data once you have added your objects to the active document. When you are adding a lot of objects to a scene, Cinema 4D might have to build a lot of caches, which can take some time. Without code and a concrete description of what you want to accomplish, it is impossible for us to give you any good advice here.

Cheers,

Ferdinand
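The lost-update interleaving described in the reply above can be replayed deterministically in plain C++. This is only a sketch under stated assumptions: `CowList` and `PendingInsert` are hypothetical stand-ins invented for illustration, not Cinema 4D SDK types, and the two "threads" are simulated sequentially so that the outcome does not depend on scheduling.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a copy-on-modify list. NOT the Cinema 4D type.
struct CowList
{
    std::vector<int> items;
};

// One in-flight insertion: it snapshots the shared list, appends to the
// private snapshot, and only later writes the snapshot back.
struct PendingInsert
{
    CowList localCopy;

    PendingInsert(const CowList& shared, int value) : localCopy(shared)
    {
        localCopy.items.push_back(value);
    }

    void Commit(CowList& shared) const { shared = localCopy; }
};

// Replays the interleaving from the post: both "threads" take their copy
// before either one writes back.
std::vector<int> ReplayUnsynchronized()
{
    CowList data;
    PendingInsert threadI(data, 1);   // Thread I copies, then modifies.
    PendingInsert threadII(data, 2);  // Thread II copies the same old state.

    // Both snapshots started from the empty list, so each holds one item.
    assert(threadI.localCopy.items.size() == 1);
    assert(threadII.localCopy.items.size() == 1);

    threadI.Commit(data);   // data = localCopy1
    threadII.Commit(data);  // data = localCopy2, the item of Thread I is lost
    return data.items;
}

// Serialized access: each insertion completes before the next one starts.
std::vector<int> ReplaySerialized()
{
    CowList data;
    PendingInsert(data, 1).Commit(data);
    PendingInsert(data, 2).Commit(data);
    return data.items;
}
```

In this sketch `ReplayUnsynchronized()` ends up with only the item written by the second committer, while `ReplaySerialized()` keeps both. Serializing access avoids the loss, but it also removes the parallelism, which matches the point that the insertions cannot be parallelized in a meaningful manner.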

-

Hi @ferdinand

You have understood everything correctly.

Yes, I want to add a lot of objects in the scene,

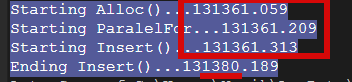

Yes, when the number is 250, it is not a problem at all. I am talking about the case when the number is 3000.

```cpp
// The function is called from the ObjectDataPlugin Messages.
doc->StartUndo();

// Alloc() all
// MainThread
maxon::TimeValue timer = maxon::TimeValue::GetTime();
ApplicationOutput("Starting Alloc()...@", timer.GetSeconds());

for (Int32 i = 0; i <= amount; ++i) // 3000
{
    BaseObject* const joint = BaseObject::Alloc(Osphere);
    BaseObject* const cyl = BaseObject::Alloc(Ocylinder);
    BaseObject* const connector = BaseObject::Alloc(180000011);
    BaseObject* const upV = BaseObject::Alloc(Onull);
    BaseTag* const DBtag = BaseTag::Alloc(180000102);
    BaseTag* const Constraint1 = BaseTag::Alloc(1019364);
    BaseTag* const Constraint2 = BaseTag::Alloc(1019364);

    joints.push_back(joint);
    cylinders.push_back(cyl);
    connectors.push_back(connector);
    UpVs.push_back(upV);
    DBtags.push_back(DBtag);
    constTags1.push_back(Constraint1);
    constTags2.push_back(Constraint2);
}

// ParallelFor. Sort, set params
timer = maxon::TimeValue::GetTime();
ApplicationOutput("Starting ParallelFor...@", timer.GetSeconds());

// Worker lambda
auto worker = [amount, modifyOnly, &controller1, &controller2, ...etc ](maxon::Int32 i)
{
    joints.at(i)->SetParameter(ID_BASELIST_NAME, spline->GetName() + String::IntToString(i), DESCFLAGS_SET::NONE);
    joints.at(i)->SetParameter(...etc

    Vector firstpos = ... // calculate some points

    cylinders.at(i)->SetParameter(ID_BASELIST_NAME, spline->GetName() + String::IntToString(i), DESCFLAGS_SET::NONE);
    cylinders.at(i)->SetParameter(...

    if (.... some calculation)
    {
        joints.at(i)->SetMg(BuildLookAt(~controller1->GetMg() * firstpos, ~controller1->GetMg() * secondpos, joints.at(i)->GetMg().sqmat.v3));
    }

    cylinders.at(i)->SetRelPos(Vector(0, GetDistance(firstpos, secondpos) / 2, 0));

    ....etc.... // a lot of calculations and setParams, setpos, setMg. No insertion in the scene here.
};

// Async
maxon::ParallelFor::Dynamic(0, amount+1, worker ); // works nice and fast

// Now an easy but most slow part: objs insertion into the scene
// Main thread.
timer = maxon::TimeValue::GetTime();
ApplicationOutput("Starting Insert()...@", timer.GetSeconds());

for (int j = 0; j < joints.size(); j++)
{
    // DB-class
    joints.at(j)->InsertTag(DBtags.at(j));
    CreatedObjsList->InsertObject(DBtags.at(j), 0);
    AdditionalCreatdObjsList->InsertObject(DBtags.at(j), 0);

    // joints
    joints.at(j)->InsertUnder(controller2);
    SpecBonesField->InsertObject(joints.at(j), 0);
    /*doc->AddUndo(UNDOTYPE::NEWOBJ, joints.at(j));*/

    // Cylinder
    cylinders.at(j)->InsertUnder(joints.at(j));
    CreatedObjsList->InsertObject(joints.at(j), 0);
    CreatedObjsList->InsertObject(cylinders.at(j), 0);
    AdditionalCreatdObjsList->InsertObject(joints.at(j), 0);
    AdditionalCreatdObjsList->InsertObject(cylinders.at(j), 0);
    /*doc->AddUndo(UNDOTYPE::NEWOBJ, cylinders.at(j));*/

    // Connector-class
    if (simple bool)
    {
        UpVs.at(j)->InsertUnderLast(parentNull);
        CreatedObjsList->InsertObject(UpVs.at(j), 0);
        AdditionalCreatdObjsList->InsertObject(UpVs.at(j), 0);
        /*doc->AddUndo(UNDOTYPE::NEWOBJ, UpVs.at(j));*/

        connectors.at(j)->InsertTag(constTags1.at(j));
        connectors.at(j)->InsertTag(constTags2.at(j));
        connectors.at(j)->InsertUnderLast(parentNull);
        CreatedObjsList->InsertObject(connectors.at(j), 0);
        AdditionalCreatdObjsList->InsertObject(connectors.at(j), 0);
        /*doc->AddUndo(UNDOTYPE::NEWOBJ, connectors.at(j)); */

        // tracer in-Ex
        tracerInEx->InsertObject(connectors.at(j), 0);
    }
    //doc->AddUndo(UNDOTYPE::NEWOBJ, DBtags.at(j));
}

timer = maxon::TimeValue::GetTime();
ApplicationOutput("Ending Insert()...@", timer.GetSeconds());
doc->EndUndo();
```

If I remove everything related to InExData from the "slow part", it works much faster, even when the number of objects is really big (3000). I thought that AddUndo() might be causing the problem, but no: when I exclude it too, it does not affect the speed of the "slow" step at all.

So my suspicion was exactly that InExData causes the slowdown.

-

And here is the result for adding 3000 objects to the scene, but with no InExData->InsertObject() calls:

Starting Alloc()...133894.367

Starting ParalelFor...133894.477

Starting Insert()...133894.542

Ending Insert()...133894.551

-

Hey yaya,

that is indeed a bit surprising, or maybe not, considering the copying thing. It would be good if you grouped up as many `InsertObject` calls as possible and then measured the time it takes to carry them all out per iteration of `j`. As a background, `InsertObject` is quite a straightforward method; it roughly looks like this mockup method:

```cpp
SomeArray<IncludeExcludeItem> items; // The items for the in-exclude
Int32 itemCount;                     // The number of links in the IncludeExcludeData

Bool IncludeExcludeData::InsertObject(BaseList2D* node, Int32 flags)
{
  InternalDataType* copy = NewInternalDataThing<InternalDataType>(itemCount + 1);

  // Make a copy of the data.
  for (Int32 i = 0; i < itemCount; i++)
  {
    items[i].CopyTo(copy[i]);
  }

  // The new last item
  copy[itemCount].SetData();

  // Overwrite the internal data.
  items = copy;
  itemCount++;

  return true;
}
```

So, my prediction would be that with each iteration of `j` for a loop that looks like this:

```cpp
maxon::BaseArray<maxon::TimeValue> timings;
for (int j = 0; j < joints.size(); j++)
{
  ...
  maxon::TimeValue t = maxon::TimeValue::GetTime();

  // Block of InExcludeData::InsertObject calls
  a->InsertObject(stuff[j], 0);
  b->InsertObject(stuff[j], 0);
  ...
  n->InsertObject(stuff[j], 0);

  // Sort of measure the execution time block, system clocks are not the most reliable thing, and when
  // push comes to shove, you might have run this multiple times and average out the values when
  // this produces very noisy timings.
  timings.Append(maxon::TimeValue::GetTime() - t) iferr_return;
}
```

the `timings` values will get larger and larger, as you must copy more and more data.

This would technically make sense, because all that copying in the copy loop of `InsertObject` is not the cheapest thing to do, and when you try to do this on a non-trivial scale, it could result in a hefty performance penalty. There is nothing you can do to circumvent this.

But I am not fully convinced yet that `InExcludeData` is the sole culprit, because the time you measured seems a bit excessive for just copying some arrays, even when done hundreds or thousands of times. I would really measure the timings to be sure.

Cheers,

Ferdinand

-
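The growth that the mockup in the reply above predicts can be made visible without the SDK by counting how many items each insert has to copy. The following is a minimal sketch in standard C++ under stated assumptions: `CopyOnInsertList` and `CopiesPerInsert` are hypothetical names made up for illustration, they only mimic the copy loop of the mockup, and they say nothing about how the real `InExcludeData` is implemented internally.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in that mimics the mockup: every insert builds a new
// buffer, copies all existing items into it, and then appends the new item.
class CopyOnInsertList
{
public:
    // Returns how many existing items had to be copied for this insert.
    std::size_t Insert(int value)
    {
        std::vector<int> copy;
        copy.reserve(items_.size() + 1);

        // The copy loop from the mockup.
        for (int item : items_)
            copy.push_back(item);
        const std::size_t copied = items_.size();

        // The new last item.
        copy.push_back(value);
        assert(copy.size() == copied + 1);

        // Overwrite the internal data.
        items_ = std::move(copy);
        return copied;
    }

    std::size_t Size() const { return items_.size(); }

private:
    std::vector<int> items_;
};

// Inserts `count` items one by one and records the copy work of each call.
std::vector<std::size_t> CopiesPerInsert(std::size_t count)
{
    CopyOnInsertList list;
    std::vector<std::size_t> copies;
    copies.reserve(count);
    for (std::size_t j = 0; j < count; ++j)
        copies.push_back(list.Insert(static_cast<int>(j)));
    return copies;
}
```

With this model, the first insert copies nothing and the 3000th insert copies 2999 items, so the per-iteration cost rises linearly with `j`. Counting copies instead of reading a clock keeps the sketch deterministic; real timings taken inside Cinema 4D would be noisier and would still need to be measured as suggested.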

OK, so it looks like I should give up on using ParallelFor for InExData, because it is the most expensive part, and using ParallelFor for all the other parts of the code does not speed things up.

And actually, as I understand it, this is pretty logical.

I have 2 InExData parameters in my plugin. So when I copy 3000 items into 2 InExData's, plus add 3000 objects to the scene, the number of operations increases to 9 000.

-

Hello @yaya

> And actually as I understand it is pretty logical.
>
> I have 2 InExData parameters in my plugin. So when I copying 3000 items into 2 InExData`s, plus adding 3000 objects into the scene, then the number of operations increases up to 9 000.

It depends a bit on what you consider an 'operation' in this context, but I would say your calculation is at least misleading. Due to the copying nature of `IncludeExcludeData::InsertObject`, inserting objects is not a constant-cost operation (unlike what your example implies). It scales linearly with the number of items already in the list (in practice probably even a little bit worse, because the copying itself calls a function which is non-constant).

So, when you have a list with `5` items and add one, it is `5 + 1 = 6` operations: you must copy over the five old items and then add the new one. And adding 5 items in total to an empty list would be `1 + 2 + 3 + 4 + 5 = 15` operations. The term 'operations' is a bit fuzzy here, and there is a lot of room for interpretation, but what I want to make clear is that adding an item to an `InExcludeData` is not a constant operation. So, adding 3000 items to an `InExcludeData` does not result in 9000 operations, but 4498500 (counting only the copied items, i.e., `0 + 1 + ... + 2999`). From this also follows that it is computationally advantageous to store items in a larger number of lists, e.g., adding 1500 items each to two `InExcludeData` costs 1124250 operations per batch, i.e., 'only' 2248500 operations for all 3000 items.

Splitting up the data is IMHO your best route of optimization. `InExcludeData` is also simply not intended for such a large number of objects being stored in it. And as stressed before: I would really test first if `InExcludeData` is indeed the culprit. `::InsertObject` has been designed in a way which does not mesh that well with what you want to do. But I do not really see it eating up 10 seconds, even for 3000 objects. I personally would expect the other calls in your loop to take up more time. But that is very hard to guesstimate from afar, and I might be wrong.

Cheers,

Ferdinand
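The operation counts quoted in the reply above can be reproduced with a few lines of standard C++. This is a sketch of the arithmetic only, with hypothetical helper names (`CopiesOnly`, `CopiesPlusWrites`, `SplitCopies`) that are not part of any SDK. It assumes the simple cost model from the post, where inserting into a list that already holds `k` items copies those `k` items.

```cpp
#include <cassert>
#include <cstdint>

// Items copied when n items are appended one by one to an empty
// copy-on-insert list: 0 + 1 + ... + (n - 1) = n * (n - 1) / 2.
std::uint64_t CopiesOnly(std::uint64_t n)
{
    return n == 0 ? 0 : n * (n - 1) / 2;
}

// The same sum when the write of each new item is counted as well:
// 1 + 2 + ... + n = n * (n + 1) / 2.
std::uint64_t CopiesPlusWrites(std::uint64_t n)
{
    return n * (n + 1) / 2;
}

// Copy cost when `total` items are spread as evenly as possible over
// `lists` separate lists instead of being stored in a single one.
std::uint64_t SplitCopies(std::uint64_t total, std::uint64_t lists)
{
    assert(lists > 0);
    const std::uint64_t base = total / lists;
    const std::uint64_t extra = total % lists; // lists holding one more item
    return extra * CopiesOnly(base + 1) + (lists - extra) * CopiesOnly(base);
}
```

Under this model, `CopiesPlusWrites(5)` gives the 15 operations of the five-item example, `CopiesOnly(3000)` gives 4498500, and `SplitCopies(3000, 2)` gives 2248500, so halving the list size roughly halves the total copy work. The two counting conventions differ only by `n`, which does not change the quadratic growth.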