Hey @SweepingMotion,

First of all, I did not mean to discourage you by implying that this would only be possible in C++. All I am saying is that having every point of a mesh look up its neighbors and then possibly run an iterative spreading algorithm on each iteration sounds costly.

You can certainly do this in Python; you should just brace yourself for really bad performance when you run it on complex meshes with complex settings. I used the modelling command for the growing part not only out of laziness, but also because the modelling command runs in C++ and is therefore nice and fast.
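To make that cost a bit more concrete, here is a minimal pure-Python sketch of the neighbor lookup plus one spreading iteration. The names `build_neighbors` and `spread_step` and the "move towards the strongest neighbor" rule are hypothetical illustrations, not the growing algorithm from this thread, and the mesh is passed as plain tuples of point indices so that the sketch does not depend on the `c4d` module.

```python
from collections import defaultdict


def build_neighbors(polygons):
    """Map each point index to the set of point indices it shares an edge with.

    `polygons` is a list of tuples of point indices (triangles or quads).
    """
    neighbors = defaultdict(set)
    for polygon in polygons:
        count = len(polygon)
        for i in range(count):
            # Each consecutive pair of corners (wrapping around) forms an edge.
            a, b = polygon[i], polygon[(i + 1) % count]
            neighbors[a].add(b)
            neighbors[b].add(a)
    return neighbors


def spread_step(weights, neighbors, rate=0.5):
    """Advance the spreading by one iteration and return the new weights.

    Every point moves towards the strongest weight among its neighbors by
    `rate`. Weights never decrease, so a point that was reached stays reached.
    """
    result = list(weights)
    for index, value in enumerate(weights):
        # This neighbor lookup for every point on every iteration is the
        # part that gets expensive in Python on dense meshes.
        strongest = max((weights[n] for n in neighbors[index]), default=0.0)
        if strongest > value:
            result[index] = value + (strongest - value) * rate
    return result


# Two quads side by side: points 0-1-2 along the bottom, 3-4-5 along the top.
polygons = [(0, 1, 4, 3), (1, 2, 5, 4)]
neighbors = build_neighbors(polygons)
weights = spread_step([1.0, 0.0, 0.0, 0.0, 0.0, 0.0], neighbors)
print(weights)  # [1.0, 0.5, 0.0, 0.5, 0.0, 0.0]
```

Each call touches every point and all of its neighbors, and you would call it once per simulation iteration on every frame, all in interpreted Python.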

Your Problem

Regarding your problem, I think I understand what you want. You want a field to (persistently) paint a vertex map and then use that map as the seed for your simulation; think of a paint brush (the field) on a canvas and the paint then spreading (the simulation). First of all, fields are not really meant to be persistent in the way that would be required here. Or, to use your example, you would want your balloon to keep tearing even when the field on the tip of the needle no longer touches any balloon vertex after the balloon has started tearing apart.

Fields are also not meant to carry out the computations over the whole field space that a simulation implies. That is, you pass in a point x and the field sampler spits out a weight and a vector for it. A field modifier layer would be better suited for running a simulation, as modifiers operate on all values of a field. The problem is that modifiers do not do the sampling part, i.e., evaluating a transform with a falloff. So you would kind of need both.

One could certainly do all this if motivated enough. You could, for example, precompute all values and then sample from a look-up table/cache when your field is sampled. But this all seems very complicated for no good reason.
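Purely for illustration, the look-up part of that idea could look roughly like the sketch below. `PointValueCache` is a hypothetical class, not part of the Cinema 4D field API; it assumes the per-point values have already been computed and simply returns the value of the point closest to the sampled position.

```python
import math


class PointValueCache:
    """Hypothetical look-up cache: values computed ahead of time, one per point."""

    def __init__(self, positions, values):
        if len(positions) != len(values):
            raise ValueError("positions and values must have the same length")
        self._positions = list(positions)
        self._values = list(values)

    def sample(self, query):
        """Return the cached value of the point closest to `query`.

        This is a brute-force search over all points; a practical version
        would likely want a spatial acceleration structure instead.
        """
        closest = min(range(len(self._positions)),
                      key=lambda i: math.dist(self._positions[i], query))
        return self._values[closest]


cache = PointValueCache([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [0.25, 0.75])
print(cache.sample((0.9, 0.1, 0.0)))  # 0.75
```

Even this toy version shows the extra machinery involved: the cache has to be rebuilt whenever the simulation advances, and the brute-force search costs one distance computation per point for every single sample.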

Recommendation

I would still recommend using at least a Python TagData plugin. C++ would be better. A Programming tag also works, but there you must be hackier and more knowledgeable to make it work.

There are two ways in which you could realize the field/brush influence you want:

The first option is to ignore MoGraph fields altogether. Here you would implement your own ObjectData plugin which does the painting. You would have two parts: the object(s) continuously painting seeds into a vertex map, and your spider-in-the-net tag, which then runs a simulation on that map. You would only need one vertex map in this case, but you would also have to reimplement falloffs and noise for the fields/brushes to the degree you need them.

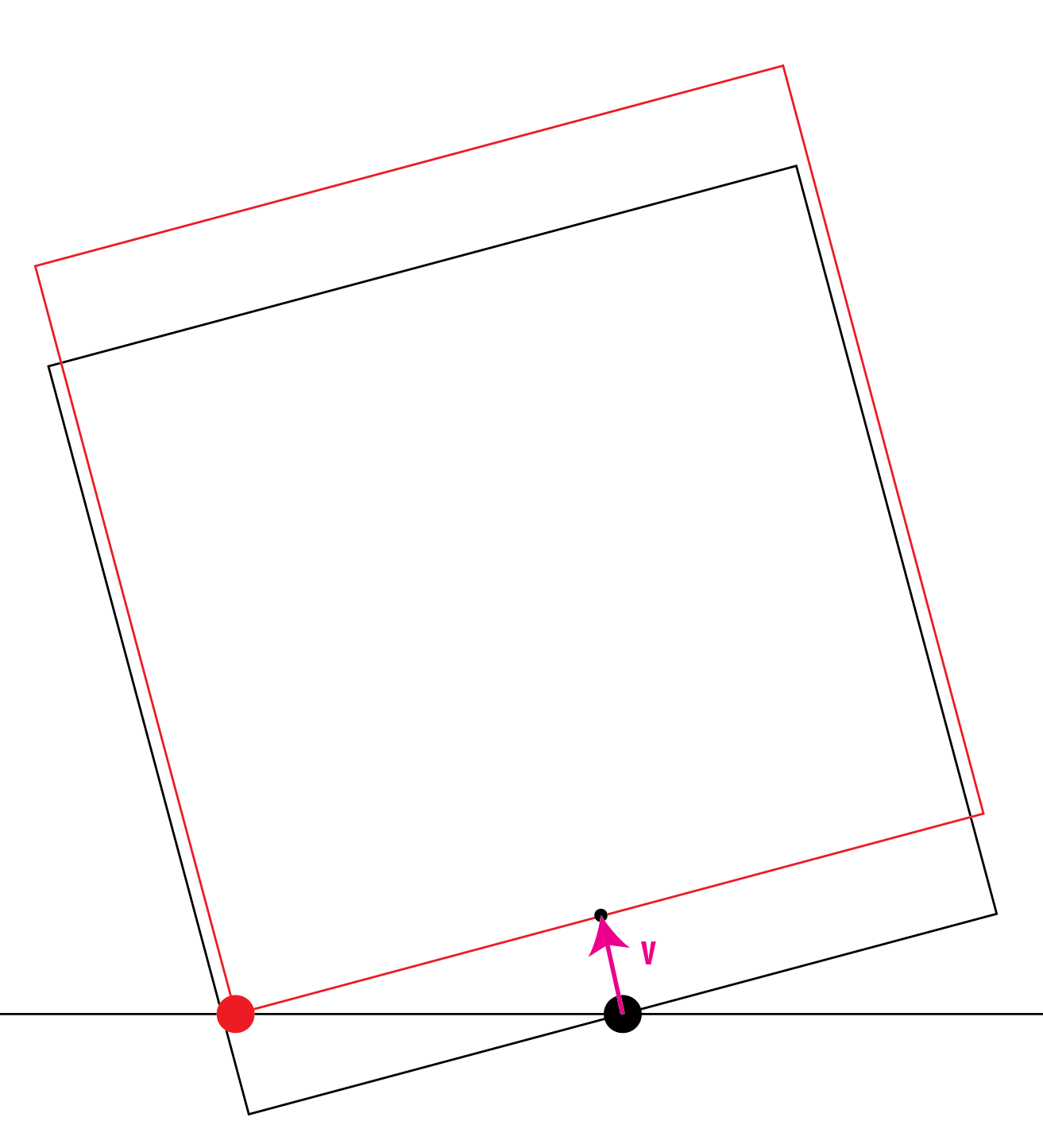
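For the first option, the painting part could be sketched roughly as follows. `linear_sphere_falloff` and `paint` are hypothetical helpers that stand in for the falloffs you would have to reimplement (noise is left out), and the vertex map is modeled as a plain list of weights, one per point. Taking the max of the old weight and the brush influence is what keeps the paint on the canvas after the brush has moved on.

```python
import math


def linear_sphere_falloff(point, center, radius):
    """Hypothetical spherical falloff: 1.0 at `center`, fading linearly to 0.0 at `radius`."""
    distance = math.dist(point, center)
    if distance >= radius:
        return 0.0
    return 1.0 - distance / radius


def paint(weights, points, brush_center, brush_radius):
    """Paint one brush stamp into `weights` in place and return it.

    Keeping the max of the old weight and the brush influence means a point
    that was painted once keeps its weight after the brush has moved on.
    """
    for index, point in enumerate(points):
        influence = linear_sphere_falloff(point, brush_center, brush_radius)
        weights[index] = max(weights[index], influence)
    return weights


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
weights = [0.0] * len(points)
paint(weights, points, brush_center=(0.0, 0.0, 0.0), brush_radius=2.0)
paint(weights, points, brush_center=(3.0, 0.0, 0.0), brush_radius=2.0)
print(weights)  # [1.0, 0.5, 1.0]
```

Your simulation tag would then run on this single map, for example with a spreading step like the one sketched further up.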

The second option is to use MoGraph fields, but not to mix them with the simulation directly. The idea is to have two vertex maps. A seed vertex map is driven by MoGraph fields, and you can go ham there and use all of MoGraph to your liking. On each iteration, your plugin tag would then copy the seed map into a second, simulation map, for example by blending the values or by taking the max value of both maps, and then advance the simulation on that simulation map. This has the advantage that you get temporal persistence for free (since you copy from a volatile seed map into the persistent simulation map). You would also sidestep the problem of having to somehow weave the simulation into fields. If you then wanted to pretty this up, you could give your tag a field list of its own. To the user it would then look as if there were only the one output vertex map, while internally the tag feeds its field list into a hidden seed vertex map.
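The per-iteration step of the second option could be sketched as below, with both vertex maps modeled as plain lists of weights. `merge_seed`, `tag_iteration` and the `grow` placeholder are hypothetical names; in an actual TagData plugin you would presumably read and write the two maps with `VariableTag.GetAllHighLevelData()` and `VariableTag.SetAllHighLevelData()` and plug in your real simulation instead of `grow`.

```python
def merge_seed(simulation, seed, mode="max", blend=0.5):
    """Copy the volatile seed map into the persistent simulation map.

    "max" keeps whatever the simulation has already reached; "blend" moves
    each simulation weight towards the seed weight by `blend`.
    """
    if len(simulation) != len(seed):
        raise ValueError("both vertex maps must have one weight per point")
    if mode == "max":
        return [max(old, new) for old, new in zip(simulation, seed)]
    if mode == "blend":
        return [old + (new - old) * blend for old, new in zip(simulation, seed)]
    raise ValueError(f"unknown mode: {mode!r}")


def tag_iteration(simulation, seed, advance, mode="max"):
    """One iteration of the hypothetical tag: merge the seed, then advance."""
    return advance(merge_seed(simulation, seed, mode))


def grow(weights):
    """Placeholder simulation step: every nonzero weight grows by 0.25, capped at 1.0."""
    return [min(1.0, w + 0.25) if w > 0.0 else w for w in weights]


simulation = [0.0, 0.0, 0.0]
# Frame 1: the field touches point 0.
simulation = tag_iteration(simulation, [0.5, 0.0, 0.0], grow)
# Frame 2: the field has moved away, the seed map is empty again.
simulation = tag_iteration(simulation, [0.0, 0.0, 0.0], grow)
print(simulation)  # [1.0, 0.0, 0.0]
```

With `mode="max"` the simulation keeps going after the field has left, which is the persistence described above. With `mode="blend"` a point's weight is pulled back towards the seed on every iteration, so it fades once the field moves away unless the simulation step outpaces it.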

In my opinion, number two is the more sensible solution. Number one would give you more freedom, but it would also be much more work.

Cheers,

Ferdinand